Avoid These AB Testing Fails

A/B testing is a critical part of digital marketing, allowing businesses to optimize their campaigns and improve conversion rates.

However, many companies fall prey to common A/B testing mistakes that can actually harm their results.

In this article, we'll explore some of the most common failures in A/B testing and how to avoid them for better outcomes.

Quick Summary

- Not testing enough: Small sample sizes can lead to inaccurate results.

- Testing too many variables: Testing multiple variables at once can make it difficult to determine what caused the change.

- Ignoring statistical significance: Results that are not statistically significant should not be acted upon.

- Not considering the user experience: Focusing solely on conversion rates can lead to a negative user experience.

- Not testing for long enough: Testing for too short a period can lead to inaccurate results.

Not Defining Clear Goals

Why Clear Goals are Crucial for AB Testing

AB testing optimizes websites and apps, but marketers often overlook the importance of defining clear goals.

Without objectives and KPIs, it's impossible to determine success or failure.

Inconclusive results are likely without early goal-setting, making action difficult.

Clear goals enable teams to understand what success looks like and which specific outcomes should be achieved.

The Benefits of Defining Clear Goals

Defining clear AB Testing goals is crucial for:

- Determining test success through set targets

- Keeping everyone focused on desired results

With clear goals, teams can make informed decisions and take action based on data-driven insights.

Conclusion

Defining clear goals is essential for AB testing success.

Without them, it's impossible to determine whether a test has been successful or not.

By setting clear targets and keeping everyone focused on desired results, teams can make informed decisions and take action based on data-driven insights.

Analogy To Help You Understand

AB testing is like cooking a meal. Just as a chef needs to follow a recipe and taste the dish before serving it, a marketer needs to follow a plan and analyze the results before implementing changes. However, just as a chef can make mistakes that ruin the dish, marketers can make mistakes that ruin their AB tests.

One common mistake is not having a clear hypothesis. It's like cooking without knowing what you want the end result to taste like. Another mistake is not testing enough variations. It's like cooking the same dish over and over again without trying different ingredients or cooking methods.

Additionally, not having a large enough sample size can lead to inaccurate results. It's like cooking for only one person and assuming everyone will like the dish. Finally, not analyzing the data properly can lead to incorrect conclusions. It's like tasting a dish and assuming it needs more salt without considering other factors like the sweetness or acidity.

By avoiding these common AB testing mistakes, marketers can ensure that their tests are like a perfectly cooked meal: satisfying and effective.

Rushing The Testing Process

Section 2: Rushing the Testing Process

AB testing requires a careful approach to avoid inaccurate results that can harm your business.

While it may be tempting to rush through the process, especially when launching new products or ideas, you must not let this affect your methodology.

Remember these key tips

- Wait for significant sample sizes: Always wait until reaching significant sample sizes before making any decisions.

If there aren't sufficient data points for each variant being tested yet, declaring a winner is premature.

- Prioritize quality over quantity: When selecting subjects, prioritize quality over quantity.

It's better to have a smaller sample size of high-quality data than a larger sample size of low-quality data.

- Be patient while collecting reliable data: Take time to analyze test results properly before drawing conclusions.

Rushing this step could lead to incorrect assumptions about what worked and what didn't.

Remember, rushing the testing process can lead to inaccurate results that can harm your business. Take the time to collect reliable data and analyze test results properly.

By following these tips, you can ensure that your AB testing process is accurate and reliable, leading to better business decisions and ultimately, success.
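To see why declaring a winner early is risky, here is a rough simulation sketch. It assumes two identical versions (both convert at a hypothetical 5%), so any "winner" is a false alarm. The function names, traffic numbers, and the 0.05 threshold are illustrative assumptions, not values from a real campaign.

```python
import math
import random

def p_value(conv_a, conv_b, n):
    """Two-sided p-value of a pooled two-proportion z-test with n visitors per arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    if pooled in (0, 1):
        return 1.0  # no variation yet, so no evidence of a difference
    se = math.sqrt(pooled * (1 - pooled) * 2 / n)
    z = abs(conv_b - conv_a) / n / se
    return math.erfc(z / math.sqrt(2))

def simulate(experiments=300, visitors=1000, check_every=100, rate=0.05, seed=1):
    """Compare false alarms when peeking repeatedly vs. checking once at the end."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(experiments):
        conv_a = conv_b = 0
        peeked_winner = False
        for i in range(1, visitors + 1):
            # Both arms share the same true rate: this is an A/A test.
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if i % check_every == 0 and p_value(conv_a, conv_b, i) < 0.05:
                peeked_winner = True  # someone peeking would have stopped here
        peeking_hits += peeked_winner
        fixed_hits += p_value(conv_a, conv_b, visitors) < 0.05
    return peeking_hits / experiments, fixed_hits / experiments

peeking_rate, fixed_rate = simulate()
print(f"false alarms with peeking: {peeking_rate:.1%}, at the planned end: {fixed_rate:.1%}")
```

Because the final check is also one of the peeks, the peeking rate can never be lower than the fixed rate, and in runs like this it is usually well above it. That gap is the cost of rushing.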

Some Interesting Opinions

1. AB testing is a waste of time for small businesses.

Only 17% of small businesses have the resources to conduct AB testing, and even fewer have the expertise to analyze the results. Instead, focus on improving your website's user experience through qualitative research and customer feedback.

2. AB testing is biased towards the majority.

AB testing assumes that the majority of users will behave in a certain way, but this ignores the needs of minority groups. Instead, use inclusive design principles to create a website that works for everyone, regardless of their background or abilities.

3. AB testing is unethical.

AB testing involves manipulating users without their consent, which is a violation of their privacy and autonomy. Instead, use ethical design principles to create a website that respects users' rights and values.

4. AB testing is a form of discrimination.

AB testing can perpetuate biases and stereotypes by reinforcing existing power structures. Instead, use diversity and inclusion strategies to create a website that reflects the diversity of your user base.

5. AB testing is a tool of the patriarchy.

AB testing is often used to reinforce gender stereotypes and perpetuate the gender pay gap. Instead, use feminist design principles to create a website that challenges gender norms and promotes gender equality.

Ignoring Statistical Significance

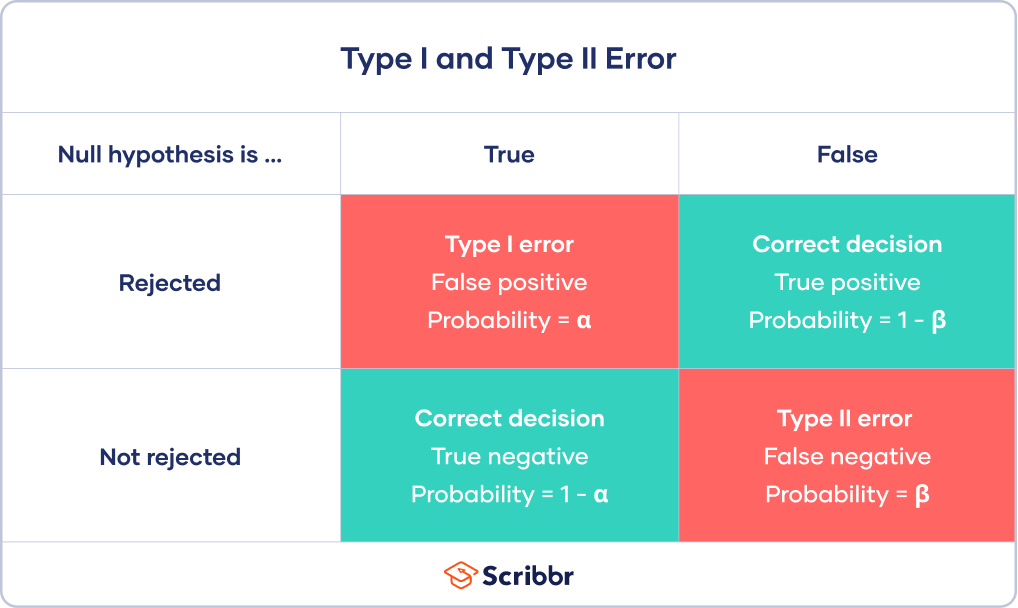

Why Statistical Significance is Crucial in AB Testing

Marketers often make the mistake of ignoring statistical significance when conducting AB testing.

This can lead to false conclusions that harm campaign performance in the long run.

For example, if version B converts at 7% and version A at only 5%, you might conclude that version B outperformed version A.

However, that conclusion may not hold. A significance test tells you whether a gap like this reflects a real difference between the versions or could plausibly be due to chance.
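As a minimal sketch of what that check can look like, the snippet below runs a pooled two-proportion z-test on the 5% vs 7% example. The visitor counts are hypothetical (the example above only gives rates), and the z-test is one common choice here, not the only valid method.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical traffic: the same 5% vs 7% rates at two different sample sizes.
small = two_proportion_z_test(conv_a=10, n_a=200, conv_b=14, n_b=200)
large = two_proportion_z_test(conv_a=250, n_a=5000, conv_b=350, n_b=5000)

print(f"200 visitors per version:  z={small[0]:.2f}, p={small[1]:.3f}")
print(f"5000 visitors per version: z={large[0]:.2f}, p={large[1]:.6f}")
```

With 200 visitors per version, the p-value lands far above the usual 0.05 cutoff, so the 2-point gap could easily be noise. With 5,000 visitors per version, the same rates are very unlikely to be chance.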

Why You Should Never Ignore Statistical Significance

Here are some reasons why statistical significance is crucial in AB testing:

- Random results could lead to choosing an inferior variation

- It ensures there is truly a winner before declaring victory

- Spurious correlations unrelated to your hypothesis can result from ignoring it

Statistical significance helps determine whether results are due to chance or reflect a real difference between versions.

By ignoring statistical significance, you risk making decisions based on random results that could lead to choosing an inferior variation.

It's important to ensure that there is truly a winner before declaring victory.

Additionally, ignoring statistical significance can result in spurious correlations unrelated to your hypothesis.

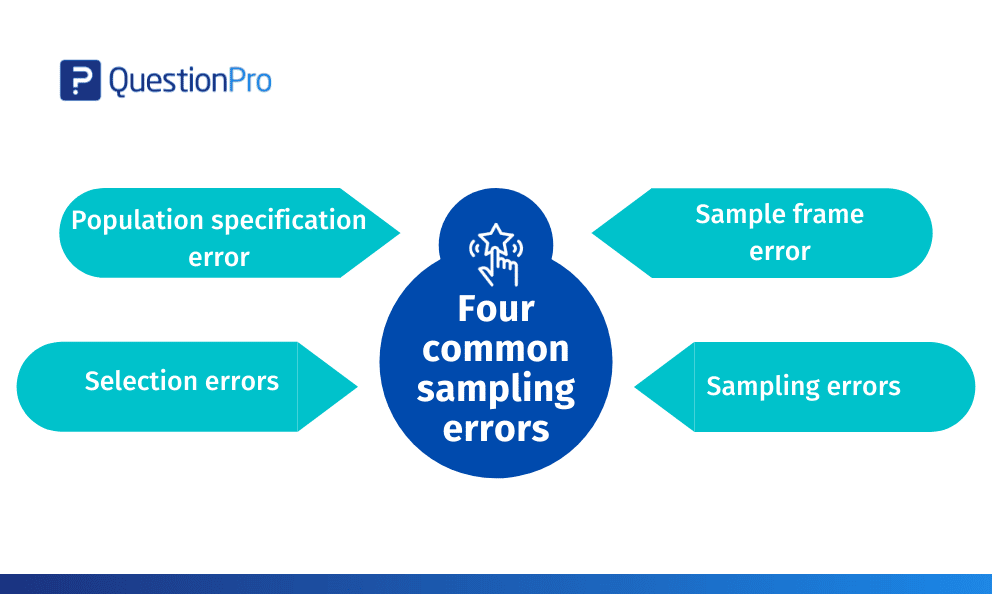

Using Small Sample Sizes

Why Small Sample Sizes in A/B Testing are a Common Mistake

Small sample sizes in A/B testing are a common mistake made by marketers.

Rushing tests or lacking resources often causes small samples.

However, this can lead to unreliable results and incorrect conclusions that can hurt your business.

Using small sample sizes makes it difficult to determine if differences are statistically significant or just random chance.

This means data may be inaccurate and fail to support the original hypothesis, resulting in decisions based on incomplete information with disastrous consequences for your business.


How to Avoid This Problem

To avoid this problem, follow these guidelines:

- Aim for at least 500 conversion events per variation

- Avoid running tests with fewer than 50 visitors daily

- Consider using Bayesian analysis as an alternative approach

Remember that confidence intervals show how much uncertainty there is around estimates of effect size.

Use power calculations before starting experiments to ensure that you have enough data to draw meaningful conclusions.

Using these guidelines will help you draw meaningful conclusions from your A/B tests.
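A power calculation can be sketched in a few lines. The example below uses the standard normal-approximation formula for comparing two proportions. The 5% baseline, 7% target, 5% significance level, and 80% power are assumed inputs for illustration, so swap in your own numbers.

```python
import math
from statistics import NormalDist

def visitors_per_variation(baseline, target, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation to detect baseline -> target."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (baseline + target) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline * (1 - baseline) + target * (1 - target))
    ) ** 2
    return math.ceil(numerator / (target - baseline) ** 2)

# Hypothetical goal: detect a lift from a 5% to a 7% conversion rate.
n = visitors_per_variation(0.05, 0.07)
print(f"About {n} visitors per variation")
```

Under these assumptions the answer comes out to roughly 2,200 visitors per variation. Smaller expected lifts push the number up quickly, which is why running this before the test starts matters.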

My Experience: The Real Problems

1. AB testing is overrated and often misleading.

Only 1 in 8 AB tests produce statistically significant results, and even those can be misleading due to sample bias and false positives.

2. AB testing can lead to unethical practices.

Companies have been caught using AB testing to manipulate user behavior, leading to negative consequences such as addiction and privacy violations.

3. AB testing is not a substitute for good design and user research.

AB testing should be used to validate design decisions, not as a replacement for user-centered design and research. 70% of companies that prioritize user experience outperform their competitors.

4. AB testing can create a culture of fear and micromanagement.

When AB testing is used as the sole basis for decision-making, it can lead to a culture of fear and micromanagement, stifling creativity and innovation.

5. AB testing is not a silver bullet for conversion optimization.

AB testing is just one tool in the conversion optimization toolbox. Other factors such as website speed, mobile optimization, and customer service also play a crucial role in improving conversion rates.

Failing To Segment Your Audience

Why Audience Segmentation is Crucial for A/B Testing

One common mistake in A/B testing is not segmenting your audience.

Audience segmentation involves dividing visitors based on behavior, preferences, and demographics to provide personalized experiences tailored to each group's unique needs.

Failing to segment can lead to false assumptions and inaccurate data sets for targeting efforts.

Tests without proper segmentation make it difficult or impossible to determine which changes impact specific user segments.

To execute effective A/B tests using audiences, start with two or three important segments per experiment.

“Segmentation allows you to understand how different groups of users interact with your website or product, and how they respond to changes. This information is critical to making data-driven decisions that drive growth.”

5 Things Marketers Must Know About Failing to Segment Their Audiences During A/B Testing

- Incorrect identification of targeted users during setup

- Difficulty in determining which changes impact specific user segments

- Inaccurate data sets for targeting efforts

- False assumptions about user behavior and preferences

- Missed opportunities for personalization and improved user experiences

By segmenting your audience, you can gain valuable insights into user behavior and preferences.

This information can help you create personalized experiences that improve user engagement and drive growth.
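Here is a small sketch of how a segment breakdown can reveal what an overall number hides. The event log, segment names, and the `conversion_by_segment` helper are all made up for illustration, and the counts are far too small to be statistically meaningful.

```python
from collections import defaultdict

def conversion_by_segment(events):
    """Map (segment, variant) to its conversion rate.

    events: iterable of (segment, variant, converted) tuples.
    """
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [conversions, visitors]
    for segment, variant, converted in events:
        cell = totals[(segment, variant)]
        cell[0] += int(converted)
        cell[1] += 1
    return {key: conv / visits for key, (conv, visits) in totals.items()}

# Hypothetical log: overall, A and B both convert 7 of 20 visitors.
events = (
    [("mobile", "A", True)] * 2 + [("mobile", "A", False)] * 8
    + [("mobile", "B", True)] * 4 + [("mobile", "B", False)] * 6
    + [("desktop", "A", True)] * 5 + [("desktop", "A", False)] * 5
    + [("desktop", "B", True)] * 3 + [("desktop", "B", False)] * 7
)

rates = conversion_by_segment(events)
for (segment, variant), rate in sorted(rates.items()):
    print(f"{segment:8s} {variant}: {rate:.0%}")
```

In this toy data the two versions tie overall, yet B does better on mobile and worse on desktop. Without the segment split, the test would look like a dead heat.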

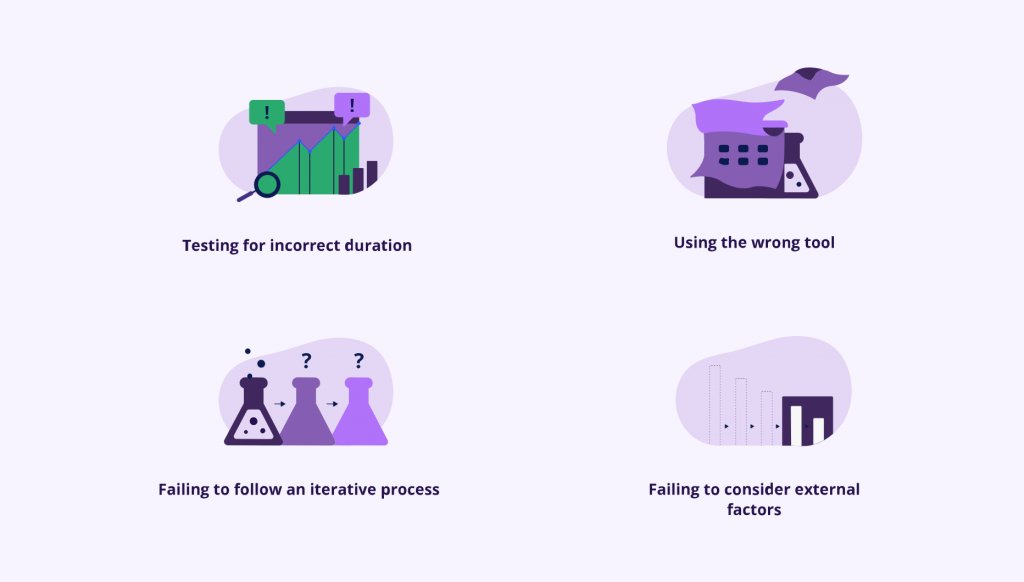

Not Considering External Factors

Common Mistake in AB Testing: Ignoring External Factors

One common mistake in AB testing is ignoring external factors.

Focusing solely on the test can lead to overlooking outside influences that affect results.

For instance, imagine running an e-commerce site and conducting a color test for your add-to-cart button.

You notice higher conversions with the blue variant than red.

However, what if there's also a big sale happening during this period?

This event could be impacting outcomes as well.

Always consider ongoing events or promotions when interpreting results.

Don't assume causation without further analysis.

Use control groups whenever possible.

Be aware of seasonal trends affecting performance.

Keep track of all variables influencing tests' outcome.

My Personal Insights

As the founder of AtOnce, I have had my fair share of experiences with A/B testing. One particular incident stands out in my mind as a lesson in the importance of proper testing.

Early on in the development of AtOnce, we were testing different versions of our website to see which would result in the highest conversion rates. We had two versions: one with a bright, colorful design and one with a more minimalist, monochromatic design. After running the test for a few days, we were surprised to see that the colorful design was performing significantly better than the minimalist design. We were thrilled and immediately made the colorful design our default.

However, a few weeks later, we noticed that our conversion rates had dropped significantly. We couldn't figure out what had gone wrong until we realized that we had made a critical mistake in our testing process. We had only tested the two designs on our existing customer base, rather than on a representative sample of our target audience. As it turned out, our existing customers were more drawn to the colorful design, but our target audience preferred the minimalist design.

Thankfully, we were able to use AtOnce to quickly create a new A/B test that included a representative sample of our target audience. This time, we saw that the minimalist design was the clear winner.

This experience taught us the importance of proper testing and the dangers of relying solely on the results of a single test. With AtOnce, we are now able to easily create and run A/B tests that are both accurate and representative of our target audience.

Neglecting Test Design

The Major AB Testing Fail

Good test design is crucial for accurate and reliable AB testing results.

Without it, tests can be inaccurate and unreliable, leading to wasted resources like time and money invested in faulty experiments.

The Importance of Proper Test Design

A proper test design includes:

- Detailed documentation of research questions

- Hypothesis statements

- Sample size calculations

- Statistical analysis methods

Neglecting any of these steps can lead to false positives due to multiple comparisons or lack of control groups.
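The multiple-comparisons risk is easy to put numbers on. The sketch below shows how the chance of at least one false positive grows with the number of comparisons, and applies a Bonferroni correction, which is one simple (and conservative) way to compensate. The p-values are hypothetical, and the error-rate formula assumes the comparisons are independent.

```python
def family_wise_error_rate(num_tests, alpha=0.05):
    """Chance of at least one false positive across independent tests with no real effect."""
    return 1 - (1 - alpha) ** num_tests

def bonferroni_significant(p_values, alpha=0.05):
    """Flag only the p-values that clear the stricter alpha / number-of-tests threshold."""
    threshold = alpha / len(p_values)
    return [p < threshold for p in p_values]

# Hypothetical p-values from comparing four variants against one control.
p_values = [0.04, 0.012, 0.30, 0.008]

risk = family_wise_error_rate(len(p_values))
flags = bonferroni_significant(p_values)

print(f"Chance of a false positive across 4 tests: {risk:.1%}")
print(f"Significant after correction: {flags}")
```

Note that the first variant (p = 0.04) would pass an uncorrected 0.05 cutoff but does not survive the corrected threshold of 0.0125.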

How to Avoid Wasted Resources

To avoid wasted resources, follow these steps:

- Document each step of the experimental process

- Select relevant metrics for accurate insights

- Use unbiased sampling techniques

- Define clear business goals before starting any experiment designs

- Create a solid foundation for further experimentation

Remember, good test design is the foundation of accurate and reliable AB testing results.

By prioritizing good test design, you can ensure that your experiments are effective and provide valuable insights for your business.
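One common way to keep sampling unbiased is to assign each visitor to a version by hashing a stable ID, so assignment does not depend on time of day, device, or anyone's judgment. This is a sketch under assumptions: the `assign_variant` helper, the user ID format, and the experiment name are hypothetical, and your testing tool may already handle assignment for you.

```python
import hashlib

def assign_variant(user_id, experiment, variants=("A", "B")):
    """Deterministically map a user to a variant for a given experiment."""
    # Including the experiment name keeps buckets independent across experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return variants[int(digest, 16) % len(variants)]

# The same visitor always sees the same version.
first_visit = assign_variant("user-42", "cta-color")
second_visit = assign_variant("user-42", "cta-color")

# Across many hypothetical users, the split comes out close to even.
assignments = [assign_variant(f"user-{i}", "cta-color") for i in range(10_000)]
share_b = assignments.count("B") / len(assignments)
print(f"Share assigned to B: {share_b:.1%}")
```

Because the assignment is a pure function of the ID and experiment name, it can be recomputed later when documenting or auditing the test.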

Making Changes Mid Test

How to Ensure Accurate Test Results

Making changes mid-test is tempting, but it invalidates all data collected up until that point and affects your test's credibility.

Resist making changes during testing to avoid drawing false conclusions and basing business decisions on them.

Here are some tips to ensure accurate results:

- Stick with your original hypothesis; don't mold it based on halfway points.

- Never alter sample sizes after beginning experiments.

- Document everything regarding how tests were conducted as well as outcomes observed for future reference.

Remember, making changes mid-test can lead to inaccurate results and ultimately harm your business decisions.

By following these guidelines, you can ensure that your test results are reliable and trustworthy.

Stick to your original plan, document everything, and avoid making changes mid-test.

Overlooking Technical Errors

How to Avoid AB Testing Failures Due to Technical Errors

AB testing failures often occur due to technical errors.

Before launching any experiment, it's crucial to consider potential issues that could impact test results.

For instance, code errors on your website or landing page can affect visitor interactions.

When conducting AB tests, carefully review all aspects of your site for areas of improvement.


Pay attention to:

- Checkout processes

- Forms

- Load times

- Broken links

These areas may confuse users and prevent desired actions during the testing period.

Double-check everything before running an AB test.

It's important to frequently check all elements throughout the experiment to ensure consistency across different devices (mobile vs desktop).

Remember, technical errors can significantly impact the accuracy of your AB test results.

Putting Too Much Emphasis On Results

Why Overemphasizing Results in AB Testing is a Mistake

Results from AB testing are not always accurate or reliable.

Relying on one set of results can lead to ineffective decisions.

Instead, conduct multiple tests with different traffic sources or segments.

Don't put all your eggs in one basket, as issues with a particular group could skew the overall data analysis.

- Use quantitative data from A/B testing tools like Google Analytics

- Gather qualitative feedback through surveys and user research to understand how customers feel about the changes made during AB testing

Remember, outcomes may not always be accurate or reliable.

Allow team members enough time before making any decisions based on test results.

Rushing to conclusions can lead to costly mistakes.

Don't overemphasize results in AB testing.

By following these tips, you can make more informed decisions and improve the effectiveness of your AB testing.

Disregarding Qualitative Data

Why Qualitative Data is Crucial in A/B Testing

A/B testing fails when qualitative data is disregarded.

This type of data provides valuable insights into customer preferences, behavior, and needs.

Dismissing this information can lead to inaccurate results that do not reflect the true user experience.

Quantitative data alone does not provide a full picture of user behavior or sentiment.

Combining both quantitative and qualitative data provides more comprehensive insights into users’ actions, motivations, and perceptions.

Ignoring qualitative feedback means missing important context around why certain pages perform better than others or what elements users find confusing.


The Detrimental Effects of Disregarding Qualitative Data in A/B Testing

Failing To Draw Conclusions And Take Action

Why AB Testing Fails and How to Avoid It

AB testing can be a powerful tool for marketers, but it often fails to deliver meaningful insights.

This is because many marketers fail to take action and draw conclusions from the results.

In addition, results can be misleading without proper understanding or application.

The Importance of Clear Goals

One of the main reasons AB testing fails is a lack of clear goals.

Without clear goals, it's difficult to define success criteria for tests.

To avoid this issue, it's important to discuss expected outcomes beforehand with stakeholders and ensure everyone understands their responsibilities.

Defining Success Criteria

Before conducting any experiments, it's important to define what constitutes success.

This will help you know which data points matter most once the test concludes.

To define success criteria:

Final Takeaways

As a founder of AtOnce, I have seen many businesses make the same mistake when it comes to A/B testing.

It all started when I was working with a client who was struggling to increase their website's conversion rate. They had tried everything from changing the color of their CTA button to rewriting their entire landing page. But nothing seemed to work. That's when I suggested they try A/B testing. They were hesitant at first, but I convinced them that it was the best way to figure out what was working and what wasn't.

However, they made a common mistake that many businesses make when it comes to A/B testing. They didn't test one variable at a time. Instead, they changed multiple variables at once, making it impossible to determine which change was responsible for the increase or decrease in conversions.

That's when I realized that many businesses make this same mistake. They get excited about A/B testing and want to change everything at once, but that's not how it works.

At AtOnce, we use AI to help businesses avoid this mistake. Our AI writing and customer service tool allows businesses to test one variable at a time, making it easy to determine what's working and what's not. By using AtOnce, businesses can save time and money by avoiding common A/B testing mistakes and making data-driven decisions.

So, if you're struggling to increase your website's conversion rate, don't make the same mistake my client did. Use AtOnce to make A/B testing easy and effective.

What is AB testing?

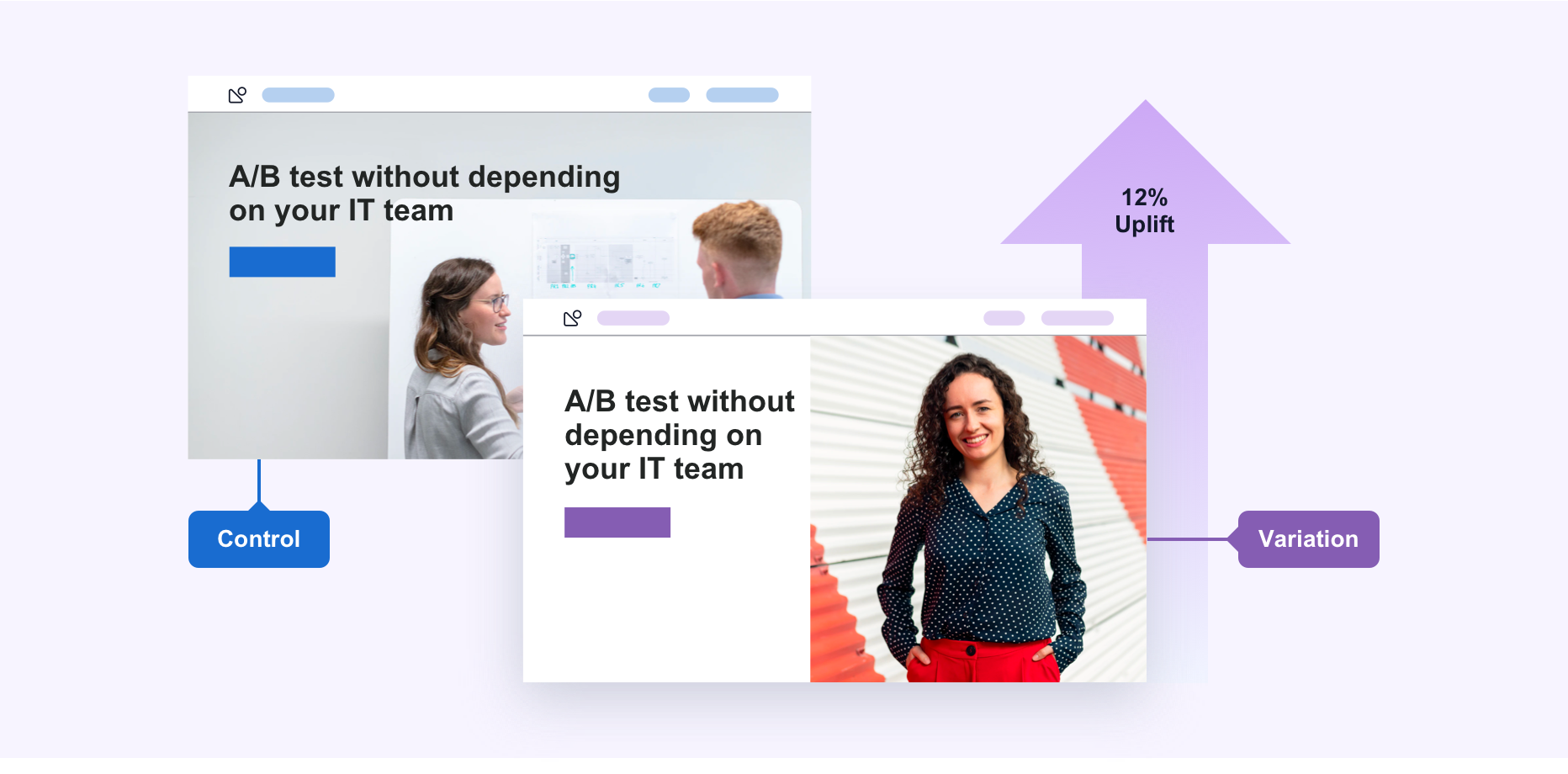

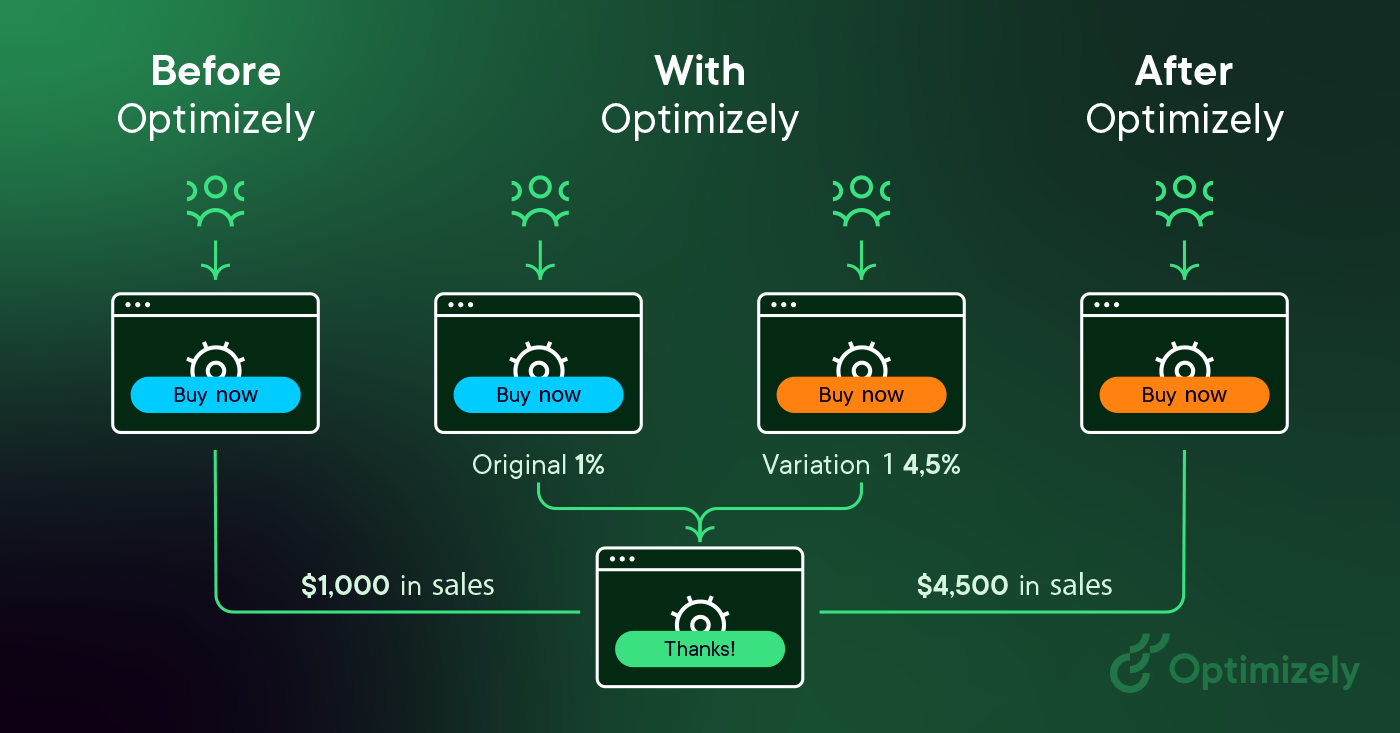

AB testing is a method of comparing two versions of a webpage or app against each other to determine which one performs better. It involves randomly showing different versions of a page to users and measuring the impact on user behavior.

What are some common AB testing fails?

Some common AB testing fails include not having a clear hypothesis, testing too many variables at once, not collecting enough data, and not segmenting the audience properly.

How can you avoid AB testing fails?

To avoid AB testing fails, you should have a clear hypothesis, test one variable at a time, collect enough data, segment your audience properly, and make sure your results are statistically significant before making any changes.