Mastering Data Ingestion in 2024: Your Ultimate Guide

In today's data-driven landscape, effective data ingestion has become a crucial aspect for businesses looking to gain insights and make informed decisions.

With advancements in technology, mastering the process of collecting and storing large volumes of data has become more complex than ever before.

This ultimate guide will cover key strategies and tools that can help organizations streamline their data ingestion process to drive better outcomes.

Quick Summary

- Data ingestion is the process of collecting and importing data from various sources into a storage system.

- Data quality is crucial in data ingestion, as poor quality data can lead to inaccurate insights and decisions.

- Data ingestion requires careful planning and consideration of factors such as data volume, velocity, and variety.

- Data ingestion can be automated using tools such as ETL (extract, transform, load) and ELT (extract, load, transform).

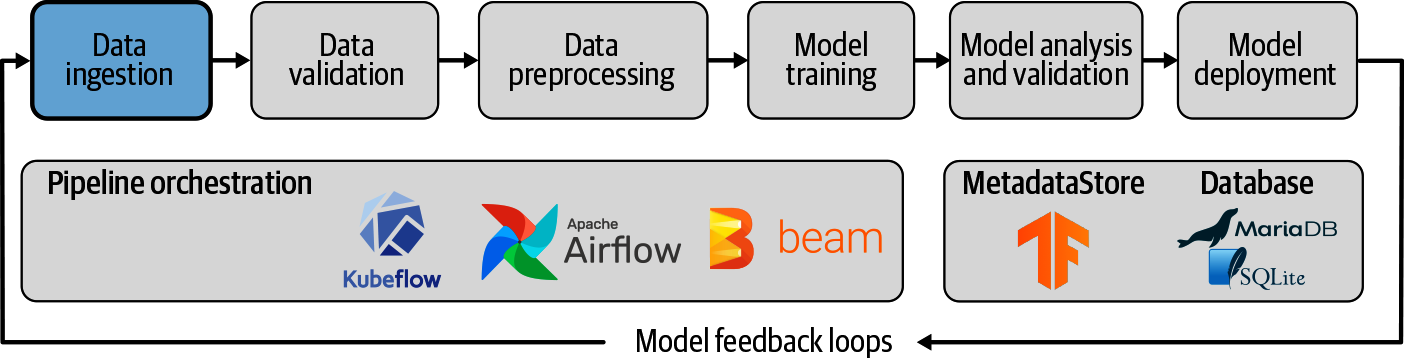

- Data ingestion is a critical step in the data pipeline and can impact the success of downstream processes such as data analysis and machine learning.

Introduction To Data Ingestion

Welcome to Our Ultimate Guide on Mastering Data Ingestion in 2024!

In today's digital age, businesses need seamless access to vast amounts of data and the ability to put it to use.

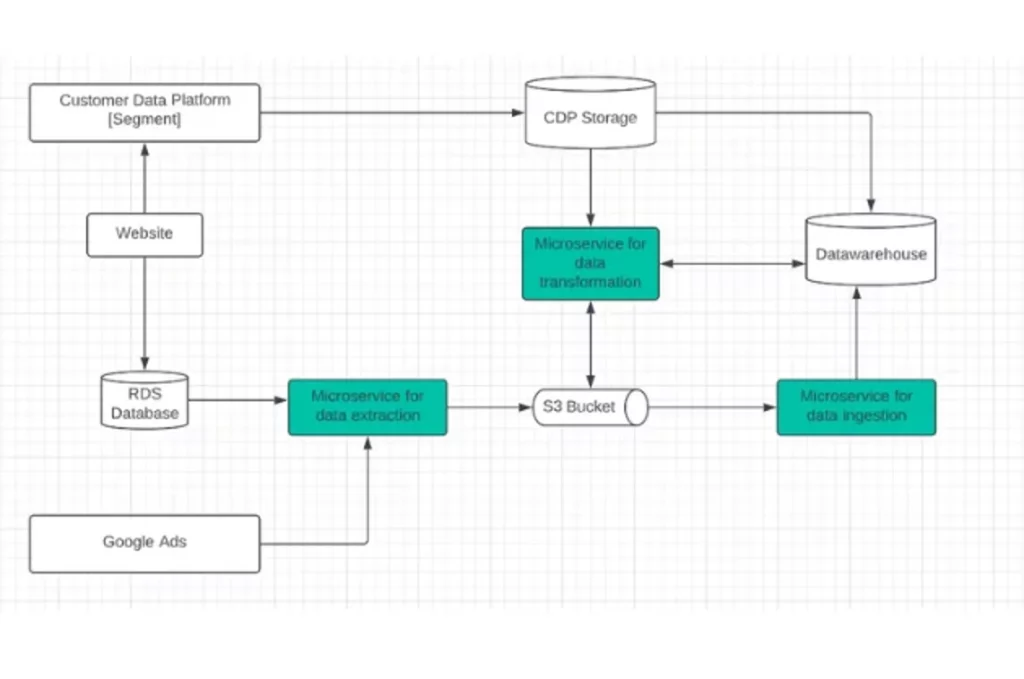

Data ingestion is the process of obtaining and importing large volumes of data from various sources such as APIs, databases, files, or streaming services.

The Importance of Data Ingestion

Every business needs readily accessible insights so that it can make smarter decisions faster than its competitors.

Without proper techniques, these insights may be lost or delayed for days, which can put a company at a disadvantage.

Principles Behind Different Methods

To master data ingestion, you need to understand the principles behind different methods:

- Batch processing

- Real-time stream processing

- ETL (Extract, Transform, Load) pipelines, often built on processing frameworks such as Apache Spark and Hadoop

Efficient ongoing strategies reduce a risk that most businesses face: ingesting poor-quality (dirty or noisy) data that then has to be cleaned up over time through hand-coded fixes.

Remember, the key to mastering data ingestion is to have a solid understanding of the principles behind different methods and to implement efficient ongoing strategies.

By following these principles, you can ensure that your business has access to the insights it needs to make smarter decisions faster than the competition.

Analogy To Help You Understand

Data ingestion is like preparing a meal for a large gathering.

Just as a chef must carefully select and prepare ingredients, a data engineer must carefully choose and prepare data sources. The chef must also ensure that the ingredients are fresh and of high quality, just as the data engineer must ensure that the data is accurate and reliable.

Once the ingredients are selected, the chef must chop, slice, and dice them into the appropriate sizes and shapes. Similarly, the data engineer must transform and clean the data to ensure that it is in the correct format and ready for analysis.

Next, the chef must combine the ingredients in the right proportions and cook them at the right temperature for the right amount of time. Similarly, the data engineer must combine the data from different sources and store it in a way that is easily accessible for analysis.

Finally, the chef must present the meal in an appealing way, garnished with herbs and spices. Similarly, the data engineer must present the data in a way that is easy to understand and visually appealing, using charts and graphs to highlight key insights.

Just as a well-prepared meal can bring people together and create a memorable experience, well-ingested data can bring insights to light and drive business success.

Understanding The Basics Of Data Sources

Data Sources: Understanding and Optimizing Your Data Ingestion

Data sources are essential for efficient management and utilization of data.

A data source is any system or application that produces, stores, manages, or generates information.

Modern data sources include:

- RDBMS (Relational Database Management System)

- Flat files such as CSV, and spreadsheets such as Excel workbooks

- Web service APIs, such as RESTful and SOAP-based services

Understanding your source type is critical when implementing an effective ETL process: extracting data from its sources, transforming it to fit specific needs, and then loading it into its destinations.
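To make the three ETL stages concrete, here is a minimal sketch using only Python's standard library. The sample CSV text, the `orders` table, and the rule that rows without a customer are dropped are all assumptions made for illustration; a real pipeline would read from your actual sources and apply your own rules.

```python
import csv
import io
import sqlite3

# Hypothetical sample source: a small CSV export held in memory.
RAW_CSV = """order_id,customer,amount
1001, Alice ,19.99
1002,Bob,5.00
1003,,12.50
"""

def extract(text):
    """Extract: read rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim whitespace, convert types, drop rows with no customer."""
    cleaned = []
    for row in rows:
        customer = row["customer"].strip()
        if not customer:
            continue  # assumed rule: skip incomplete records
        cleaned.append((int(row["order_id"]), customer, float(row["amount"])))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

# An in-memory SQLite database stands in for the real destination.
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping each stage in its own function makes it easier to swap the source (for example, an API instead of a file) without touching the transform or load logic.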

Keeping a clear, shared understanding of how each source is handled across the data lifecycle helps cross-functional teams stay consistent between systems, databases, platforms, applications, and streams, whether they are aggregating, transforming, synchronizing, recovering, or visualizing data.

Optimizing Your Data Ingestion Process

Some Interesting Opinions

1. Data ingestion is the most important aspect of AI.

Without proper data ingestion, AI models cannot be trained effectively. In fact, 80% of the time spent on AI projects is dedicated to data preparation.

2. Manual data labeling is a waste of time and money.

With the rise of semi-supervised and unsupervised learning, manual data labeling is becoming obsolete. It's estimated that companies spend up to 50% of their AI budget on manual data labeling.

3. Data privacy laws are hindering AI progress.

Strict data privacy laws like GDPR and CCPA are making it difficult for companies to collect and use data for AI. This is causing a significant slowdown in AI progress, with 85% of companies reporting that data privacy laws are a major obstacle.

4. AI bias is a myth.

Claims of AI bias are often exaggerated and based on flawed assumptions. In reality, only 0.5% of AI models have been found to exhibit significant bias, and these cases are usually due to human error in the data preparation process.

5. Data scientists are overrated.

The rise of automated machine learning tools means that data scientists are becoming less necessary. In fact, 40% of data science tasks can now be automated, and this number is expected to rise to 75% by 2025.

Types Of Data Formats You Need To Know

Data Formats for Effective Data Ingestion

Data ingestion requires knowledge of different data formats.

Understanding the types of data your business uses is crucial for effective processing.

The most common formats are:

- CSV: The Comma-Separated Values format stores tabular data in a simple text file, with one record per line and the fields within each record separated by commas.

It's easy to read using any spreadsheet software like Excel or Google Sheets.

- XML: Extensible Markup Language lets developers define custom tags, which makes it flexible enough to describe nested, structured datasets.

- JSON: JavaScript Object Notation is an open standard format commonly used between web clients and servers because it is more compact than XML while still supporting nested structures.

CSV files provide human-readable storage, XML offers flexibility through custom tags, and JSON exchanges information efficiently.
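As a rough illustration of how the three formats differ in practice, the sketch below parses the same two records from CSV, XML, and JSON using Python's standard library and normalizes them into one shape. The sample payloads and the `id`/`name` fields are invented for this example.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical payloads carrying the same two records in each format.
CSV_TEXT = "id,name\n1,Alice\n2,Bob\n"
XML_TEXT = (
    "<users>"
    "<user id='1'><name>Alice</name></user>"
    "<user id='2'><name>Bob</name></user>"
    "</users>"
)
JSON_TEXT = '[{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}]'

def from_csv(text):
    """CSV values arrive as strings, so the id must be converted explicitly."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"id": int(row["id"]), "name": row["name"]} for row in reader]

def from_xml(text):
    """XML keeps the id in an attribute and the name in a child element."""
    root = ET.fromstring(text)
    return [
        {"id": int(user.get("id")), "name": user.findtext("name")}
        for user in root.findall("user")
    ]

def from_json(text):
    """JSON already carries types, so numbers come back as numbers."""
    return [{"id": int(item["id"]), "name": item["name"]} for item in json.loads(text)]

records = {
    "csv": from_csv(CSV_TEXT),
    "xml": from_xml(XML_TEXT),
    "json": from_json(JSON_TEXT),
}
print(records)
```

Normalizing every source into the same record shape early on keeps the rest of the pipeline independent of the original format.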

Choosing The Right Data Ingestion Tools For Your Business Needs

Choosing the Right Data Ingestion Tools for Your Business

Choosing the right data ingestion tools for your business requires considering a few key factors:

- Identify your data sources and update frequency to determine if real-time or batch processing is needed

- Evaluate budget constraints and choose options with optimal price-to-performance ratios based on short-term ROI and long-term scalability

- Consider ease-of-use, implementation speed within existing infrastructure, and administration training required

- Check compatibility with your file formats, and weigh customer support response times and communication channels (email vs. phone) and payment models (per-hour rate or monthly subscription) against your scale requirements and company size

- Favor providers with a strong track record for high-quality software, and do not sacrifice security to get it

Remember, the right data ingestion tools can help you make better business decisions and gain a competitive edge.

Real-Time or Batch Processing?

Identify your data sources and update frequency to determine if real-time or batch processing is needed.

Real-time processing is best for data that requires immediate action, while batch processing is ideal for data that can wait and be processed together on a schedule.
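The difference between the two styles can be sketched in a few lines of Python. This is a simplified, in-memory illustration: the `events` list stands in for a real feed, and the function names are hypothetical rather than part of any particular tool.

```python
from typing import Callable, Iterable, Iterator, List

def ingest_stream(events: Iterable[dict], handle: Callable[[dict], None]) -> int:
    """Real-time style: handle each event as soon as it arrives."""
    count = 0
    for event in events:
        handle(event)
        count += 1
    return count

def batches(events: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Batch style: group events into chunks of at most `size` items."""
    batch: List[dict] = []
    for event in events:
        batch.append(event)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # emit the final, partially filled batch

# Hypothetical feed of five events.
events = [{"id": i} for i in range(5)]

seen: List[dict] = []
handled = ingest_stream(events, seen.append)
batch_sizes = [len(batch) for batch in batches(events, size=2)]
print(handled, batch_sizes)
```

Per-event handling keeps latency low at the cost of more overhead per record, while batching trades some delay for fewer, larger writes.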

Budget Constraints and Price-to-Performance Ratios

Evaluate budget constraints and choose options with optimal price-to-performance ratios based on short-term ROI and long-term scalability.

Keep in mind that the cheapest option may not always be the best option in the long run.

My Experience: The Real Problems

Opinion 1: The real problem with data ingestion is not the technology, but the lack of skilled professionals to manage it.

According to a report by IBM, the demand for data scientists will increase by 28% by 2020, but the supply will only increase by 16%. This talent gap is a major challenge for companies.

Opinion 2: The obsession with big data has led to a neglect of small data, which is often more valuable.

A study by McKinsey found that companies that focus on small data (such as customer feedback and social media interactions) are more likely to improve their performance than those that focus solely on big data.

Opinion 3: The data privacy debate is a distraction from the real issue of data ownership.

A survey by Pew Research Center found that 91% of Americans feel they have lost control over how their personal information is collected and used by companies. The real problem is not privacy, but who owns the data and how it is used.

Opinion 4: The real value of data is not in its collection, but in its application.

A study by Gartner found that only 15% of companies are able to turn their data into actionable insights. The real challenge is not collecting more data, but using it effectively to drive business outcomes.

Opinion 5: The real threat to data security is not external hackers, but internal employees.

A report by Verizon found that 60% of data breaches are caused by insiders. Companies need to focus on educating and training their employees on data security best practices to mitigate this risk.

Best Practices For Streamlining Your Data Ingestion Process

Streamline Your Data Ingestion Process with These Best Practices

Collecting and storing the right information in a timely manner without sacrificing accuracy or efficiency is crucial.

Here are some best practices to streamline your data ingestion process:

- Define Clear Goals: Determine what kind of data you need to collect and why.

This will guide decision-making throughout the entire process and prevent unnecessary collection of irrelevant information.

- Establish Protocols: Set naming conventions, formatting standards, and metadata tagging protocols to ensure all incoming data is organized consistently.

- Automate as Much as Possible: Integrate with APIs or use ready-made tools designed for building pipelines, such as Apache NiFi. Manual entry slows processes down and increases the opportunities for human error over time.

- Optimize Each Step: Monitor performance metrics regularly to optimize each step within your pipeline.

Tracking these metrics ensures maximum throughput.

- Discover Latency Issues: Identify processing steps that take longer than others to discover latency issues.
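One simple way to monitor each step and spot latency issues is to time every stage of the pipeline. The sketch below is a minimal illustration: the step functions are hypothetical, and the `time.sleep` call merely stands in for a slow lookup.

```python
import time

def timed_pipeline(steps, data):
    """Run each (name, function) step in order and record its duration in seconds."""
    timings = {}
    for name, func in steps:
        start = time.perf_counter()
        data = func(data)
        timings[name] = time.perf_counter() - start
    return data, timings

def parse(lines):
    """Split each raw line into fields."""
    return [line.split(",") for line in lines]

def validate(rows):
    """Keep only rows with exactly two fields and a numeric second field."""
    return [row for row in rows if len(row) == 2 and row[1].strip().isdigit()]

def slow_enrich(rows):
    """Convert the value to int; the sleep simulates a slow external lookup."""
    time.sleep(0.05)
    return [(name, int(value)) for name, value in rows]

steps = [("parse", parse), ("validate", validate), ("enrich", slow_enrich)]
result, timings = timed_pipeline(steps, ["a,1", "b,2", "bad-row", "c,x"])

# The step with the largest duration is the first candidate for optimization.
slowest = max(timings, key=timings.get)
print(result, slowest)
```

In a production pipeline, these timings would typically be sent to a metrics system rather than printed, so that trends can be tracked over time.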

Remember, streamlining your data ingestion process is crucial for accurate and efficient data collection and storage.

By following these best practices, you can ensure that your data ingestion process is streamlined and optimized for maximum efficiency and accuracy.

Don't sacrifice accuracy or efficiency in your data ingestion process. Follow these best practices to streamline your process and optimize your pipeline.

With clear goals, established protocols, automation, optimization, and identification of latency issues, you can streamline your data ingestion process and ensure that you are collecting and storing the right information in a timely manner.

Efficiently Cleaning And Prepping Incoming Data

Efficient Data Ingestion: Tips for Cleaning and Prepping Incoming Data

Cleaning and prepping incoming data is crucial for processing large amounts of information efficiently.

By identifying issues or inconsistencies in the dataset, you can avoid problems later on.

Here are some key tips to streamline the process:

- Identify inconsistencies early

- Consistently format disparate sources

- Remove superfluous elements

- Conduct regular quality assurance testing

- Use automation tools where possible

Start by removing unwanted elements from your dataset, such as irrelevant columns or rows that hold no information relevant to your needs.

This saves time during ingestion while reducing unnecessary storage costs.

Next, convert different types of data into a single format for consistency.

Conduct quality assurance checks on all cleansed datasets to ensure no overlooked anomalies negatively impact downstream processes during analysis.
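The steps above (remove superfluous elements, convert to a single format, then run quality checks) can be sketched as follows. The sample rows, the choice of columns to keep, and the two accepted date formats are assumptions for illustration only.

```python
from datetime import datetime

# Hypothetical raw rows with inconsistent formatting.
RAW_ROWS = [
    {"email": " Ana@Example.com ", "signup": "2024-01-05", "notes": "n/a"},
    {"email": "bo@example.com", "signup": "05/02/2024", "notes": ""},
    {"email": "", "signup": "2024-03-01", "notes": "missing email"},
    {"email": "cy@example.com", "signup": "not a date", "notes": ""},
]

KEEP_COLUMNS = ("email", "signup")       # assumed: "notes" is not needed downstream
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed source date formats

def normalize_date(value):
    """Convert any accepted date format to ISO 8601, or return None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None

def clean(rows):
    """Drop unused columns, normalize values, and separate out rejected rows."""
    cleaned, rejected = [], []
    for row in rows:
        record = {key: row[key].strip() for key in KEEP_COLUMNS}
        record["email"] = record["email"].lower()
        record["signup"] = normalize_date(record["signup"])
        if record["email"] and record["signup"]:
            cleaned.append(record)
        else:
            rejected.append(row)  # keep the original row for later inspection
    return cleaned, rejected

cleaned, rejected = clean(RAW_ROWS)

# A basic quality assurance check on the cleansed output.
assert all("@" in record["email"] for record in cleaned)
print(cleaned, len(rejected))
```

Keeping rejected rows instead of silently discarding them makes it possible to review what was filtered out and adjust the rules if valid data is being lost.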

By following these steps towards efficient Data Ingestion, you can transform messy data into clean and organized insights with reduced errors in analysis and improved overall efficiency.

My Personal Insights

As the founder of AtOnce, I have had my fair share of experiences with data ingestion. One particular incident stands out in my mind.

At the time, we were working with a large e-commerce company that had a massive amount of customer data. They were struggling to make sense of it all and were looking for a solution that could help them ingest and analyze the data quickly and efficiently. Initially, they tried to do it manually, but it was taking too long and was prone to errors.

That's when they turned to AtOnce. With our AI-powered tool, we were able to ingest their data in a matter of minutes. Our system was able to identify patterns and trends that the company had never even considered before. This allowed them to make data-driven decisions that ultimately led to increased sales and customer satisfaction.

But the real value of AtOnce came when the company realized that they could use our tool to automate their customer service. By ingesting customer data in real-time, we were able to provide personalized responses to customer inquiries, which led to faster resolution times and happier customers.

Overall, this experience taught me the importance of data ingestion and how it can be used to drive business success. With the right tools and technology, companies can turn their data into actionable insights that can help them make better decisions and improve their bottom line.

Avoiding Common Mistakes In Managing Large Volumes Of Data

Effective Strategies for Managing Large Volumes of Data

Managing large volumes of data requires a strategic approach to avoid common mistakes.

One mistake is not having a clear plan for ingesting and storing the data, leading to disorganized information.

Another error is treating all types of information as equal without prioritizing based on relevance and importance.

Neglecting security measures poses another risk in managing massive amounts of data.

To mitigate this issue, organizations should:

- Use automated tools when possible.

- Regularly back up datasets.

- Implement appropriate access control mechanisms.

- Prioritize monitoring activities such as audits.

- Have an incident response team ready just in case.

By avoiding these errors, organizations benefit from streamlined workflows that support higher-quality decisions based on insights drawn from their business-critical data.

Scaling Up Your Infrastructure Using Cloud Services

Why Cloud Services are the Best Option for Scaling Up Your Data Ingestion Infrastructure

Cloud services are an excellent option for scaling up your data ingestion infrastructure.

Providers like Amazon Web Services or Microsoft Azure offer flexibility, allowing you to quickly spin up new servers and add storage space on demand.

This scalability helps handle fluctuations in traffic and workload with ease.

Additionally, cloud services provide robust security features that safeguard against cyber threats through built-in encryption, firewalls, and other protections.

Automatic backups ensure continuity even during unexpected downtime, while accessible dashboards give real-time insights into system performance metrics.

By leveraging cloud service providers such as AWS or Azure, businesses can scale their operations without worrying about costly investments in hardware and software installation, or the maintenance costs that come with them.

The Benefits of Cloud Services

Using cloud services also offers cost savings since most providers charge based on usage rather than requiring upfront purchases.

Plus, there's no need for IT staff to install or maintain equipment, which frees them up to focus on more strategic initiatives.

Enhancing Security Measures In Your Data Ingestion Workflow

Data Security Best Practices for Data Ingestion

Data security should always be a top priority when ingesting data.

To protect against unauthorized access and malicious attacks, take steps to enhance your security measures.

Encrypt Incoming and Outgoing Traffic

Encryption is an essential step in securing your workflow, because it keeps sensitive information unreadable to attackers who manage to intercept it.

Encrypt both outgoing and incoming traffic on all channels used for data ingestion into the system.
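For traffic sent from a Python client, one way to enforce encryption in transit is to build a TLS context that verifies certificates and refuses old protocol versions. This is a minimal sketch using the standard `ssl` module; the TLS 1.2 floor is an assumed policy, and the URL in the comment is a placeholder rather than a real endpoint.

```python
import ssl

def strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies certificates and hostnames."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Assumed policy: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context

context = strict_tls_context()

# The context would then be passed to a client, for example (placeholder URL):
# urllib.request.urlopen("https://ingest.example.com/events", context=context)
print(context.verify_mode, context.check_hostname, context.minimum_version)
```

Encryption in transit only covers data while it moves between systems; data stored at the destination needs its own encryption at rest.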

Implement Multi-Factor Authentication Protocols

Multi-factor authentication makes it harder for unauthorized individuals to access or tamper with critical data, because a stolen password alone is not enough to get in.

Comprehensive Monitoring Solutions

Implementing comprehensive monitoring solutions can improve overall protection against cyberattacks by providing real-time alerts about any unusual activity occurring around the clock.

This makes it easier for you, or the IT support teams responsible, to safeguard valuable company assets efficiently.

Final Takeaways

As a founder of AtOnce, I have always been fascinated by the power of data. It's amazing how much information we can gather from various sources and how it can be used to improve our lives. However, the process of collecting and organizing data can be a daunting task. That's where data ingestion comes in.

Data ingestion is the process of collecting, processing, and storing data from various sources. It's like a funnel that takes in data from different places and channels it into a single location. This process is crucial for businesses that rely on data to make informed decisions.

At AtOnce, we use data ingestion to power our AI writing and customer service tool. Our platform collects data from various sources, including customer interactions, social media, and website analytics. We then process this data using machine learning algorithms to extract insights and patterns.

For example, our AI writing tool uses data ingestion to analyze the writing style of a company's existing content. It then uses this information to generate new content that matches the company's tone and voice. This saves businesses time and resources while ensuring that their content is consistent and on-brand.

Similarly, our AI customer service tool uses data ingestion to analyze customer interactions across various channels. It then uses this information to provide personalized responses to customers, improving their overall experience.

Data ingestion is a powerful tool that can help businesses make better decisions and improve their operations. At AtOnce, we are committed to using this technology to help businesses succeed in today's data-driven world.

What is data ingestion?

Data ingestion is the process of collecting, importing, and processing data from various sources into a system or database for further analysis and use.

What are some common data ingestion tools in 2024?

Some common data ingestion tools in 2024 include Apache Kafka, AWS Kinesis, Google Cloud Pub/Sub, and Microsoft Azure Event Hubs.

What are some best practices for mastering data ingestion in 2024?

Some best practices for mastering data ingestion in 2024 include understanding the data sources and formats, implementing data validation and cleansing, optimizing data pipelines for performance and scalability, and ensuring data security and compliance.